Aim

This application note introduces the methods required to synchronize eego™ EEG recordings with third-party systems, including sensors, presentation software, and virtual reality devices.

Background

Synchronizing EEG with external systems is essential in brain research, as it enables the study of neural responses to stimuli and the relationship between brain activity and other physiological processes. To extract meaningful insights from these integrations, precise timing between systems is critical.

Two primary methods are used for event-based synchronization: transistor-transistor logic (TTL) and Lab Streaming Layer (LSL). TTL synchronization uses hardware connections and voltage variations to send triggers directly into the EEG amplifier through the analog trigger port. This approach offers high precision with minimal latency and jitter, but it requires appropriate hardware and limits mobility due to cabling constraints.

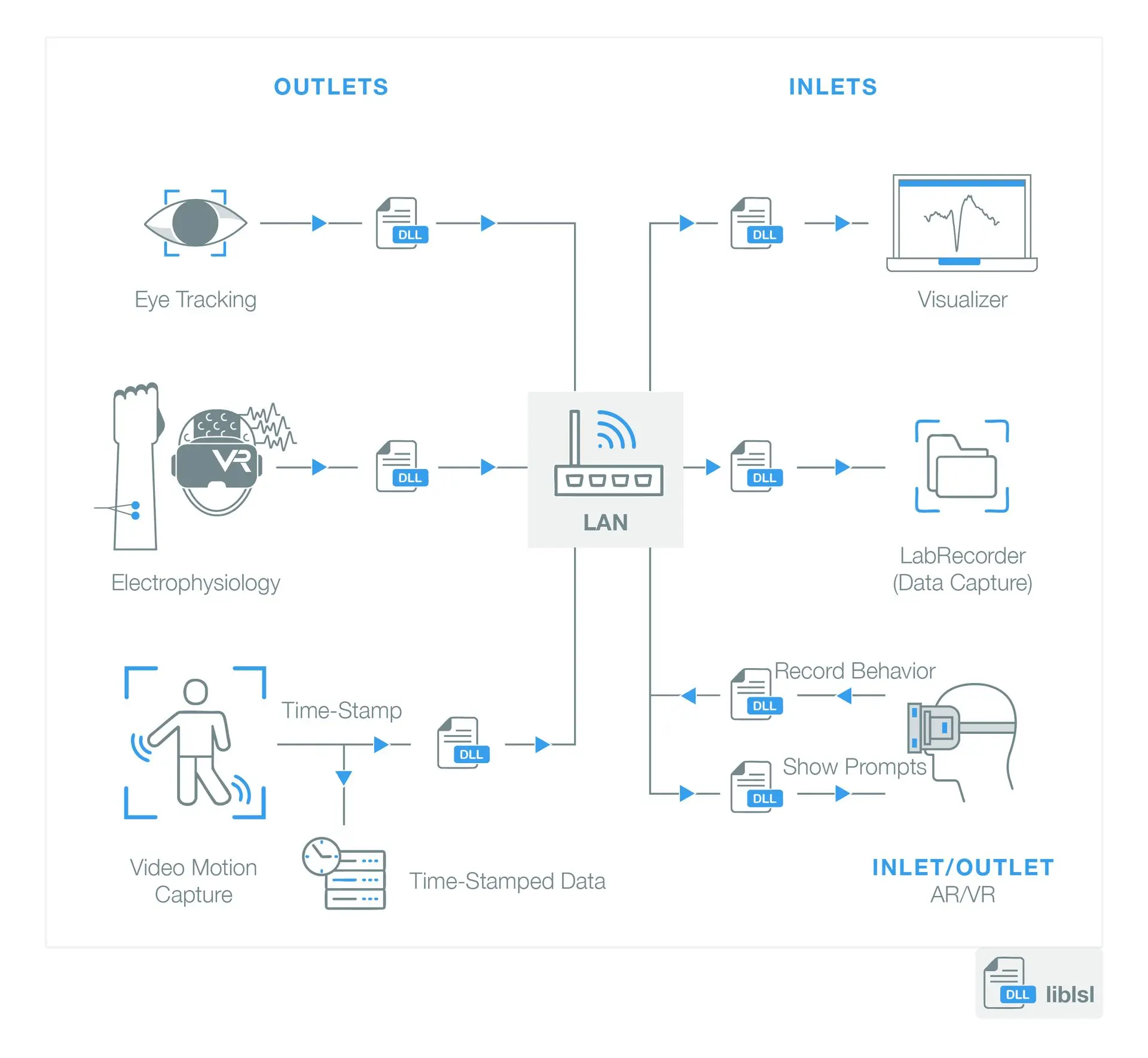

In contrast, LSL is a flexible, open-source software framework for real-time data synchronization and communication over a network. It supports multimodal data collection by synchronizing multiple data streams across devices and platforms. LSL also includes a simple API for sending event markers to time-lock stimuli to physiological responses. However, its precision can vary depending on system configuration, and performance may be affected by network conditions. LSL can also stream data from EEG and other systems to be collected by an inlet such as LabRecorder as indicated in Figure 1.

Figure 1. Schematic of LSL integrations. LSL outlets for either events or data streams are shown on the left; inlets capture LSL events (eego) or data streams (e.g., LabRecorder).

Best Practices for LSL and TTL

To maximize the precision of LSL synchronization, it is best to minimize other network usage, use a dedicated router, and validate the experimental setup by measuring real-world latency and jitter. In any recording setup, some network latency is inevitable, but after correcting for this offset, sub-millisecond jitter can be expected [1,2]. Additional information on LSL, and the software needed to implement it, is available in [3,4]. For either LSL or TTL, an experimental paradigm should be characterized against a ground-truth timing reference. For instance, photodiodes and microphones can be used to measure the latency between the trigger and the onset of a visual or auditory stimulus. For LSL, this known latency can then be set in the eego software. In all experiments, it is recommended to start the EEG recording before any external synchronization trigger sequence so that the triggers are captured in the data.
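The characterization step described above can be sketched in a few lines of Python. This is a minimal illustration, not part of the eego software: the function name `characterize_latency` and all timestamps are hypothetical, and it assumes that each trigger has already been paired with the onset detected by a photodiode or microphone on the same time base.

```python
from statistics import mean, stdev


def characterize_latency(trigger_times_s, sensor_onsets_s):
    """Return (mean latency, jitter) in milliseconds for paired events.

    Latency is the sensor onset minus the trigger time; jitter is taken
    here as the sample standard deviation of those latencies.
    """
    if len(trigger_times_s) != len(sensor_onsets_s):
        raise ValueError("each trigger needs exactly one sensor onset")
    if len(trigger_times_s) < 2:
        raise ValueError("need at least two trials to estimate jitter")
    latencies_ms = [
        (onset - trigger) * 1000.0
        for trigger, onset in zip(trigger_times_s, sensor_onsets_s)
    ]
    return mean(latencies_ms), stdev(latencies_ms)


# Hypothetical example values (seconds): trigger markers and the
# photodiode-detected onsets of the corresponding visual stimuli.
triggers = [1.000, 2.000, 3.000, 4.000]
photodiode = [1.021, 2.019, 3.020, 4.020]

latency_ms, jitter_ms = characterize_latency(triggers, photodiode)
print(f"mean latency: {latency_ms:.2f} ms, jitter (SD): {jitter_ms:.2f} ms")
```

In practice many more trials would be used, and the mean latency obtained this way is the kind of constant offset that can be corrected for, while the jitter indicates how much residual timing uncertainty remains.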

Integration With eego™

TTL communication with eego amplifiers can be achieved using either the DB25 or BNC adapters included with an eego system, or with a custom adapter for certain systems such as Tobii Pro Glasses. The input voltage must be at least 3.3 V, preferably 5 V, with a rising edge (voltage flank). For reliable detection, the trigger pulse should last at least 2 to 3 sample periods, that is, 2 to 3 times the reciprocal of the EEG sampling rate (for example, 4 to 6 ms at 500 Hz). To receive LSL events in eego, first go to Application options → Network Operation → Network Events and check “Enable Network Events”. LSL events must be sent as either 32-bit integers or strings to be recognized by eego. To stream data out from eego, check the box “Enable LSL EEG streaming”.
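The pulse-length guideline for TTL triggers above reduces to simple arithmetic, sketched here in Python. The helper name `min_trigger_pulse_ms` is hypothetical, the sampling rates in the loop are illustrative only, and the default of 3 sample periods takes the conservative end of the 2 to 3 range.

```python
def min_trigger_pulse_ms(sampling_rate_hz, periods=3):
    """Minimum TTL pulse length in milliseconds.

    One sample period is the reciprocal of the sampling rate; the pulse
    should span `periods` of them (2 to 3 per the guideline).
    """
    if sampling_rate_hz <= 0:
        raise ValueError("sampling rate must be positive")
    return periods * 1000.0 / sampling_rate_hz


# Illustrative sampling rates; check the rates supported by your amplifier.
for rate_hz in (500, 1000, 2000):
    print(f"{rate_hz} Hz -> pulse of at least {min_trigger_pulse_ms(rate_hz)} ms")
```

Because the minimum pulse length grows as the sampling rate falls, a pulse width chosen for a high sampling rate may be too short if the same paradigm is later recorded at a lower rate.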

Existing and Validated Integrations

| Modality | Sync Mode (LSL/TTL) | Logic |
| --- | --- | --- |
| Eye Tracking | LSL [5]; LSL (Pro Fusion, Glasses Pro 3) [6] or TTL (Glasses Pro 2/3); TTL (ETVision) | Customizable network events in Pupil Labs software. |
| Wireless EMG | TTL [7] | |
| Virtual Reality | LSL [8]; LSL; LSL, custom TTL; LSL | Customizable network events or receive stream from eego for all. |
| Stimulus Presentation [9] | TTL, LSL [10]; TTL, LSL [11]; TTL (Chronos device for USB-to-TTL), LSL [12] | Customizable network events transmitted via TTL or LSL. Parallel port or USB-to-TTL devices possible. |

Technical Challenges

While multimodal data as described here can offer rich insights into human behavior in naturalistic environments, combining systems introduces several technical challenges. First, because these systems are designed for use in isolation, they do not share a synchronized sampling clock, and their sampling rates can vary widely. For example, eego systems can be configured to sample between 250 Hz and 16 kHz, while high-frequency eye-tracking systems are in the range of 120 Hz [13]. Therefore, while physiological actions such as fixation on a target may be useful for placing an event marker in the EEG, the precision offered may not be sufficient for applications requiring very high temporal resolution, such as event-related potential (ERP) analysis. Second, the lack of synchronization between the clocks of different devices introduces the potential for clock drift over the course of long recording sessions. Clock drift refers to the gradual mismatch between the internal clocks of different devices, which causes their data streams to become increasingly misaligned over time. With TTL synchronization, it is therefore recommended to send synchronization events throughout a session rather than relying on a single event at the beginning of a recording. An example of such a method for synchronizing eye-tracker and EEG data is given in [14].
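To illustrate why repeated synchronization events help, the sketch below fits a straight line between the times at which shared sync events were logged on an external device and on the EEG recording, then uses that line to map device times onto the EEG time base. It is a minimal least-squares example under stated assumptions, not the method of [14]: the function names, the 0.25 s offset, and the 50 ppm drift are all hypothetical.

```python
def fit_clock_mapping(device_times_s, eeg_times_s):
    """Least-squares fit of eeg_time = slope * device_time + intercept."""
    n = len(device_times_s)
    if n != len(eeg_times_s) or n < 2:
        raise ValueError("need at least two paired sync events")
    mean_x = sum(device_times_s) / n
    mean_y = sum(eeg_times_s) / n
    sxx = sum((x - mean_x) ** 2 for x in device_times_s)
    if sxx == 0:
        raise ValueError("sync events must occur at different times")
    sxy = sum(
        (x - mean_x) * (y - mean_y)
        for x, y in zip(device_times_s, eeg_times_s)
    )
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def to_eeg_time(device_time_s, slope, intercept):
    """Map a device timestamp onto the EEG time base."""
    return slope * device_time_s + intercept


# Hypothetical session: sync events every 10 minutes. The EEG clock starts
# 0.25 s ahead and elapses 50 microseconds less per second than the device.
device_sync = [0.0, 600.0, 1200.0, 1800.0]
eeg_sync = [0.25 + t * (1 - 50e-6) for t in device_sync]

slope, intercept = fit_clock_mapping(device_sync, eeg_sync)

# Error at the last event if only the first sync event were used
# (constant offset, no drift correction), in milliseconds.
single_event_estimate = device_sync[-1] + (eeg_sync[0] - device_sync[0])
single_event_error_ms = (single_event_estimate - eeg_sync[-1]) * 1000.0
print(f"slope={slope:.6f}, intercept={intercept:.3f} s")
print(f"error with a single sync event: {single_event_error_ms:.1f} ms")
```

A linear fit assumes the drift rate is roughly constant over the session; if it is not, interpolating piecewise between neighboring sync events may be a better choice.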

Fortunately, LSL mitigates some of these issues. Clock drift is reduced because LSL checks for offsets between streams and corrects for them, by default every 5 seconds [1]. Recording via LSL also offers several advantages due to the use of XDF (Extensible Data Format). XDF files are very flexible: they can incorporate data from devices with different sampling rates and channel counts, and they store raw time-stamped data for offline processing.
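Because every sample carries its own timestamp, streams recorded at different rates can be related to the same event offline. The sketch below shows one simple way to do this, by finding the sample nearest to a marker in each stream. It assumes the timestamps have already been loaded into sorted Python lists (no particular XDF reader is implied), and the function name, rates, and values are hypothetical.

```python
from bisect import bisect_left


def nearest_sample_index(sample_times_s, event_time_s):
    """Index of the sample whose timestamp is closest to the event.

    `sample_times_s` must be sorted in ascending order.
    """
    if not sample_times_s:
        raise ValueError("stream has no samples")
    pos = bisect_left(sample_times_s, event_time_s)
    if pos == 0:
        return 0
    if pos == len(sample_times_s):
        return len(sample_times_s) - 1
    before = sample_times_s[pos - 1]
    after = sample_times_s[pos]
    # Choose whichever neighbor is closer in time to the event.
    return pos if (after - event_time_s) < (event_time_s - before) else pos - 1


# Hypothetical streams on a shared time base: EEG at 500 Hz and an eye
# tracker at 120 Hz that started 3.1 ms later.
eeg_times = [i / 500.0 for i in range(1000)]
gaze_times = [0.0031 + i / 120.0 for i in range(240)]

marker_time = 1.2345
eeg_idx = nearest_sample_index(eeg_times, marker_time)
gaze_idx = nearest_sample_index(gaze_times, marker_time)
print(f"EEG sample {eeg_idx} at {eeg_times[eeg_idx]:.4f} s")
print(f"gaze sample {gaze_idx} at {gaze_times[gaze_idx]:.4f} s")
```

The nearest sample in the slower stream can lie several milliseconds from the marker, which is the resolution limit described under Technical Challenges.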

References & External Links

[1] Kothe, C., Shirazi, S. Y., Stenner, T., Medine, D., Boulay, C., Grivich, M. I., Artoni, F., Mullen, T., Delorme, A., & Makeig, S. (2025). The lab streaming layer for synchronized multimodal recording. Imaging Neuroscience.

[2] Merino-Monge, M., Molina-Cantero, A. J., Castro-García, J. A., & Gómez-González, I. M. (2020). An easy-to-use multi-source recording and synchronization software for experimental trials. IEEE Access, 8, 200618-200634.

[3] LSL homepage: https://labstreaminglayer.org/

[4] LSL code: https://github.com/labstreaminglayer

[5] Tobii LSL: labstreaminglayer/App-TobiiPro (GitHub)

[6] Pupil Labs LSL: Neon - Lab Streaming Layer (Pupil Labs Docs)

[7] Delsys Application Note: Synchronized recording of EEG and Delsys wireless EMG (ANT Neuro Academy)

[8] Unity LSL: labstreaminglayer/LSL4Unity: A integration approach of the LabStreamingLayer Framework for Unity3D (GitHub)

[9] Stimulus Presentation Application Note: Using stimulus presentation packages with your eego™ system (ANT Neuro Academy)

[10] PsychoPy LSL: kaczmarj/psychopy-lsl: Use LabStreamingLayer to handle triggers with PsychoPy (GitHub)

[11] Presentation LSL: Lab Streaming Layer

[12] E-Prime LSL package: PsychologySoftwareTools/eprime3-lsl-package-file: E-Prime 3.0 package to enable LSL (GitHub)

[13] Ladouce, S., Donaldson, D. I., Dudchenko, P. A., & Ietswaart, M. (2017). Understanding minds in real-world environments: toward a mobile cognition approach. Frontiers in Human Neuroscience, 10, 694.

[14] Xue, J., Quan, C., Li, C., Yue, J., & Zhang, C. (2017). A crucial temporal accuracy test of combining EEG and Tobii eye tracker. Medicine, 96(13), e6444.

DISCLAIMER

All information provided in this document is intended as a summary only.

For detailed product related information please always consult the latest version of the respective product’s user manual. This document is not intended to replace the user documentation. For indications on individual feature certification status (clinical vs. research only) please refer to the aforementioned user documentation.

We have attempted to write this document as accurately as possible. However, mistakes are bound to occur, and we reserve the right to make changes whenever needed or whenever new information becomes available.

All product names and brand names in this document are trademarks or registered trademarks of their respective holders.

© Copyright 2025 eemagine Medical Imaging Solutions GmbH.

No part of this document may be photocopied or reproduced or transmitted in any way without prior written consent from eemagine Medical Imaging Solutions GmbH.